Nico Prat

Creating a loader showing Vite dev server progress

Using middlewares and the HMR API to improve the developer experience

Vite is fast (usually)

When we switched from Webpack to Vite, we were amazed by its speed. Well, it's right in the name, I guess. But sometimes, when restarting our app (after a config change, new dependencies, and so on), the first load (the so-called cold start) can take a few seconds, since the browser needs to load hundreds of files, or even get stuck if, for instance, there's an error in the config file. What's more, we currently work on remote instances through SSH + VPN, which makes things worse whenever our connection gets a bit slow.

Some teammates complained that it was confusing to see basically nothing, without knowing whether the app was slow, broken, or something else. I thought there was room for improvement there, and I remembered the first time I used Nuxt: its developer experience felt very polished on this point. The first screen was a progress bar showing the client and server being built, a very nice attention to detail in my opinion.

I don't think it's used anymore, but we can still look through the code in the Nuxt Loading Screen repository. There's no build phase when Vite serves files in development (which is its whole point, by the way), but I thought we could still achieve something similar.

First try, using service workers (failure)

The goal was to keep it simple, so I tried to do the whole thing in the browser. The only way I knew to spy on network requests client-side was to intercept them with a service worker. I had never worked with service workers before, so I had to read a lot to understand how they work, and why they look so complicated.

After many attempts, I finally got something kind of working. But at that point, it seemed fragile and hard to maintain, because service workers:

- require a lot of boilerplate code
- must live in a separate, publicly served file
- have to be registered in the browser first (so they don't work at all on the first load...)
- and rely on very specific knowledge (like the self global scope object).

What's more, every request was first intercepted and then forwarded, so the network tab in the devtools was flooded with twice as many requests as before. It's possible to hide them with the -is:service-worker-intercepted filter (Chrome), but every teammate would have to set it up on their own.

I didn't feel confident keeping this in our codebase, so I kept looking for other solutions.

Playing with Vite APIs (success)

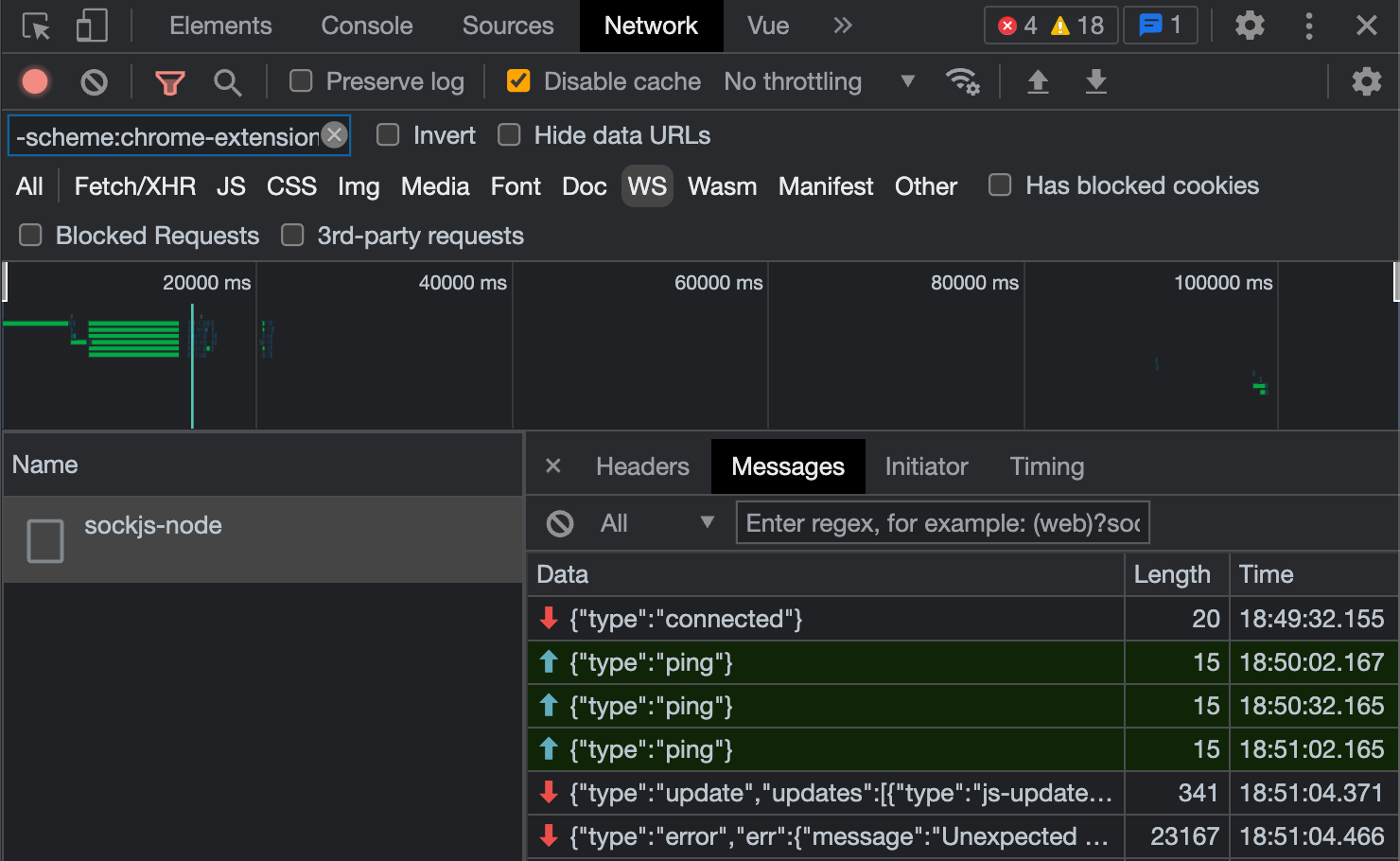

I then remembered that Vite was using websockets to communicate between the development server and the browser to replace files on the fly (HMR) or display the error overlay. Actually we can inspect them in the network tab of our devtools:

I thought we could use this channel to spy on file serving: the idea was simply to record every file request, keyed by its URL, and keep track of which ones have finished, like this:

```javascript
const queue = {
  'foo.css': false, // unfinished call
  'bar.js': true, // finished call
}

const everything = Object.values(queue)
const loaded = everything.filter(Boolean)
const ratio = loaded.length / everything.length
```
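One edge case is worth handling: before the first event arrives, the queue is empty and 0 / 0 gives NaN. Here is a minimal sketch of a helper that guards against it. The getProgress name and the choice of reporting 0 for an empty queue are our own assumptions:

```javascript
// Hypothetical helper: summarize a queue shaped like { [url]: finished }
function getProgress(queue) {
  const everything = Object.values(queue)
  const loaded = everything.filter(Boolean)
  // An empty queue would give 0 / 0 = NaN, so report 0 instead (our own choice)
  const ratio = everything.length === 0 ? 0 : loaded.length / everything.length
  return { loaded: loaded.length, total: everything.length, ratio }
}

// Usage with the example queue
const progress = getProgress({ 'foo.css': false, 'bar.js': true })
console.log(progress) // { loaded: 1, total: 2, ratio: 0.5 }
```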

With this solution, we have to implement things on both the frontend and the backend, but we can rely on tools provided by Vite itself, which makes it more robust.

Backend

It took me some time to find the right way to do it, but a custom plugin seemed to be the way to go. Its configureServer hook receives the dev server instance, so we can register a middleware there and send custom events through the server's websocket.

We only have to find a way to keep track of individual requests and their progress. Fortunately, the request object has an originalUrl property that identifies the requested file, and the response object emits a finish event once the response has been sent. So the whole thing can be summarized like this:

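```javascript
// vite.config.js
import { defineConfig } from 'vite'

export default defineConfig({
  plugins: [
    {
      name: 'loading-progress', // any unique plugin name works
      configureServer(server) {
        server.middlewares.use((req, res, next) => {
          // Tell the browser that a file has been requested...
          server.ws.send({ type: 'custom', event: 'loading', data: req.originalUrl })

          // ...and when its response has been sent
          res.on('finish', () => {
            server.ws.send({ type: 'custom', event: 'loaded', data: req.originalUrl })
          })

          next()
        })
      },
    },
  ],
})
```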

Don't forget to call next() to make sure other middlewares are called afterwards.
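To check the middleware's behavior without starting Vite, the same logic can be pulled into a standalone function and fed fake request and response objects. This is a minimal sketch with two assumptions of our own: the createProgressMiddleware name is hypothetical, and we also listen for close so that an aborted request (which may never emit finish) doesn't leave the loader stuck:

```javascript
const { EventEmitter } = require('node:events')

// Hypothetical factory: same logic as the plugin above, with the websocket
// (server.ws in Vite) passed in so it can be replaced by a fake in tests.
function createProgressMiddleware(ws) {
  return (req, res, next) => {
    // connect (which Vite uses) sets originalUrl; fall back to url otherwise
    const url = req.originalUrl ?? req.url
    ws.send({ type: 'custom', event: 'loading', data: url })

    let done = false
    const onDone = () => {
      // finish and close can both fire for the same response: notify only once
      if (done) return
      done = true
      ws.send({ type: 'custom', event: 'loaded', data: url })
    }
    res.on('finish', onDone)
    // Assumption: an aborted request may emit close without finish
    res.on('close', onDone)

    next()
  }
}

// In the plugin: configureServer(server) { server.middlewares.use(createProgressMiddleware(server.ws)) }

// Usage with fake objects: an EventEmitter stands in for the response
const sent = []
const middleware = createProgressMiddleware({ send: (payload) => sent.push(payload) })
const res = new EventEmitter()
let nextCalled = false
middleware({ originalUrl: '/src/main.js' }, res, () => { nextCalled = true })
res.emit('finish')
res.emit('close')
console.log(sent.map((m) => m.event), nextCalled) // [ 'loading', 'loaded' ] true
```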

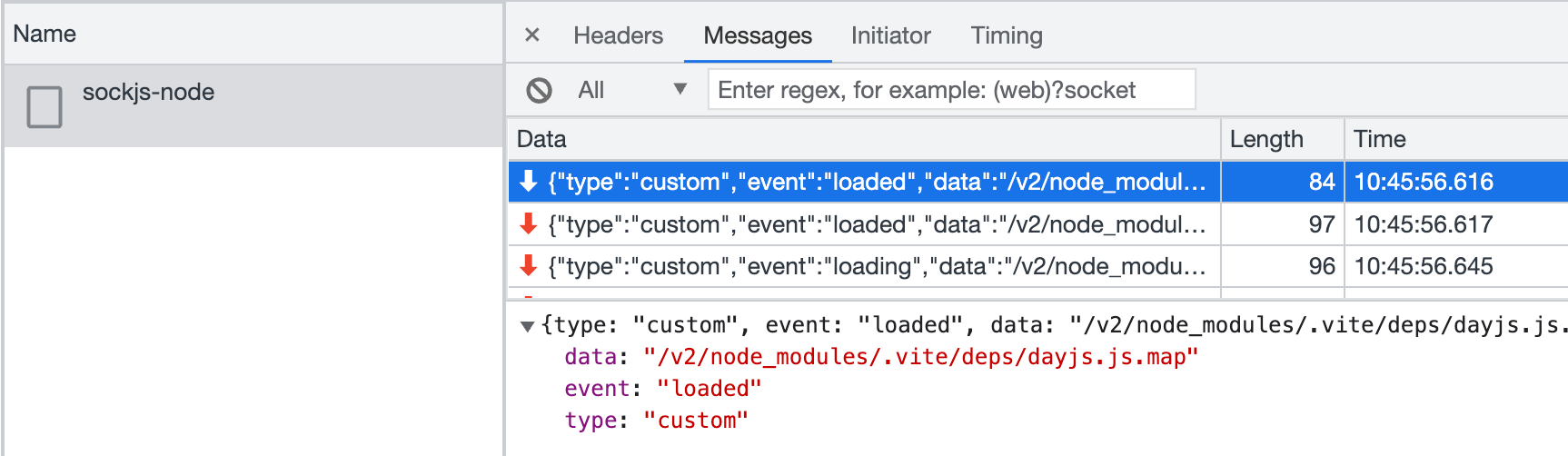

Now we can see all those custom events being sent through websockets to our client:

Frontend

OK, now that we have all the data we need, let's see how to catch it in the browser. The Vite documentation offers a lot of information about the client-side HMR API, import.meta.hot. The simplest way seems to be listening for events with import.meta.hot.on(event, callback).

Since our app is not initialized yet at that point, we have to run our script as early as possible, which means adding it directly to our index.html file, like in the good old days:

```html
<script type="module">
  // type="module" is mandatory to use import.meta.hot,
  // which only exists when the page is served by the Vite dev server
  if (import.meta.hot) {
    const queue = {}

    const update = () => {
      const everything = Object.values(queue)
      const loaded = everything.filter(Boolean)
      // Do anything: show a counter, a progress bar, a spinner...
    }

    import.meta.hot.on('loading', (data) => {
      queue[data] = false
      update()
    })

    import.meta.hot.on('loaded', (data) => {
      queue[data] = true
      update()
    })
  }
</script>
```
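What update() renders is up to you. One possible sketch, assuming a hypothetical <progress id="vite-progress"> element placed in index.html, sets the element's value and max and hides it once everything has loaded. The rendering is extracted into a hypothetical renderProgress function that takes the element as a parameter, so it can also be exercised with a plain object outside the browser:

```javascript
// Hypothetical renderer: `bar` is a <progress> element (or any object with the same properties)
function renderProgress(bar, queue) {
  const everything = Object.values(queue)
  const loaded = everything.filter(Boolean).length
  // A max of 0 is not valid for <progress>, so keep it at least 1
  bar.max = Math.max(everything.length, 1)
  bar.value = loaded
  bar.title = `${loaded} / ${everything.length} files loaded`
  // Hide the bar once every requested file has been served
  bar.hidden = everything.length > 0 && loaded === everything.length
}

// In index.html, update() could call:
// renderProgress(document.getElementById('vite-progress'), queue)
const fakeBar = {}
renderProgress(fakeBar, { '/src/main.js': true, '/src/app.css': false })
console.log(fakeBar) // { max: 2, value: 1, title: '1 / 2 files loaded', hidden: false }
```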

For our needs, we only display this loader alongside the usual one shown to users during the first load, so it looks like this:

We can see here that the first load usually takes a few seconds to complete. Nothing prevents you from using this technique to show a loader for subsequent fetches, but it felt superfluous, since they are generally very fast anyway.
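If you do want a loader for later fetches too (lazily loaded routes, for instance), one possible approach is to start a fresh batch whenever a request arrives after the previous batch has completed. That way the count restarts from zero instead of growing from the first load's total. This is our own assumption rather than something we use; the sketch below relies on a hypothetical createTracker helper whose loading and loaded methods would be called from the two import.meta.hot.on handlers:

```javascript
// Hypothetical tracker: groups requests into batches and resets once a batch has fully loaded
function createTracker(onChange) {
  let queue = {}

  const report = () => {
    const everything = Object.values(queue)
    const loaded = everything.filter(Boolean).length
    onChange(loaded, everything.length)
  }

  return {
    // Call from import.meta.hot.on('loading', ...)
    loading(url) {
      const everything = Object.values(queue)
      // The previous batch is complete: start counting a new one from scratch
      if (everything.length > 0 && everything.every(Boolean)) queue = {}
      queue[url] = false
      report()
    },
    // Call from import.meta.hot.on('loaded', ...)
    loaded(url) {
      queue[url] = true
      report()
    },
  }
}

// Usage: record "loaded/total" after each event
const log = []
const tracker = createTracker((loaded, total) => log.push(`${loaded}/${total}`))
tracker.loading('/src/main.js')
tracker.loaded('/src/main.js')
tracker.loading('/src/pages/Settings.js') // new batch: counts restart
console.log(log) // [ '0/1', '1/1', '0/1' ]
```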

Conclusion

It's a small addition, but it improves the feedback from an app we spend hours on every day, so from a developer experience perspective it can have a big impact across the team!

The only downside is that we can't use this technique in production, because our app is then no longer served by Vite, but as regular static files. Performance is a lot better in that situation anyway, so it didn't feel necessary.